Behind the Scenes: Orchestrating AI for Humanitarian Response

The International Rescue Committee's Signpost AI initiative is pioneering the use of emerging technologies to address some of the most pressing humanitarian challenges of our time. In 2024, Signpost piloted a knowledge agent that confirmed the immense potential of AI in its programs but also exposed important limitations. In brief, the agent performed on par with human responders in roughly 70% of cases; in the other 30%, a range of factors led to poor and potentially dangerous performance. As a result, the tool was appropriate only for human-in-the-loop deployment, which limited both the scalability and the impact of the initiative.

To address this missing 30%, the team's engineers set out to build Signpost AI, a far more sophisticated technical framework. The system, explored in this article, aims to solve Signpost's challenge of unlocking efficiency and scale without sacrificing safety, and it also creates the scaffolding to apply this framework to a wide array of agentic AI use cases.

At the heart of this venture lies an orchestration framework that coordinates multiple AI models, manages data flows, and seeks to ensure appropriate human oversight throughout. To explain how it works, the team's developers pulled back the curtain on the technical architecture, ethical considerations, and real-world implications of the system.

What is meant by orchestration, and what exactly is being orchestrated?

Imagine an orchestra. Think of all the different instruments: each a part of the music, each playing a different score, each delivering unique and vital components aligning to create a work of art. When we say AI orchestration, we extend this analogy to a team of AI agents working together, playing different roles, and joining forces to deliver a solution. Rather than playing collective harmonies, a team of orchestrated AI agents solves complex problems, meets rigorous safety requirements, and reaches a considerable degree of customization in user journeys.

An orchestration system operates on a foundation of intelligent routing that dynamically directs information between AI models and services (computer programs that can be called upon to perform a task) in order to solve a particular problem or work towards a specific goal. Rather than relying on a single, one-size-fits-all AI model with a linear prompting strategy, the Signpost system employs branching logic to select the most appropriate model for each identified task.

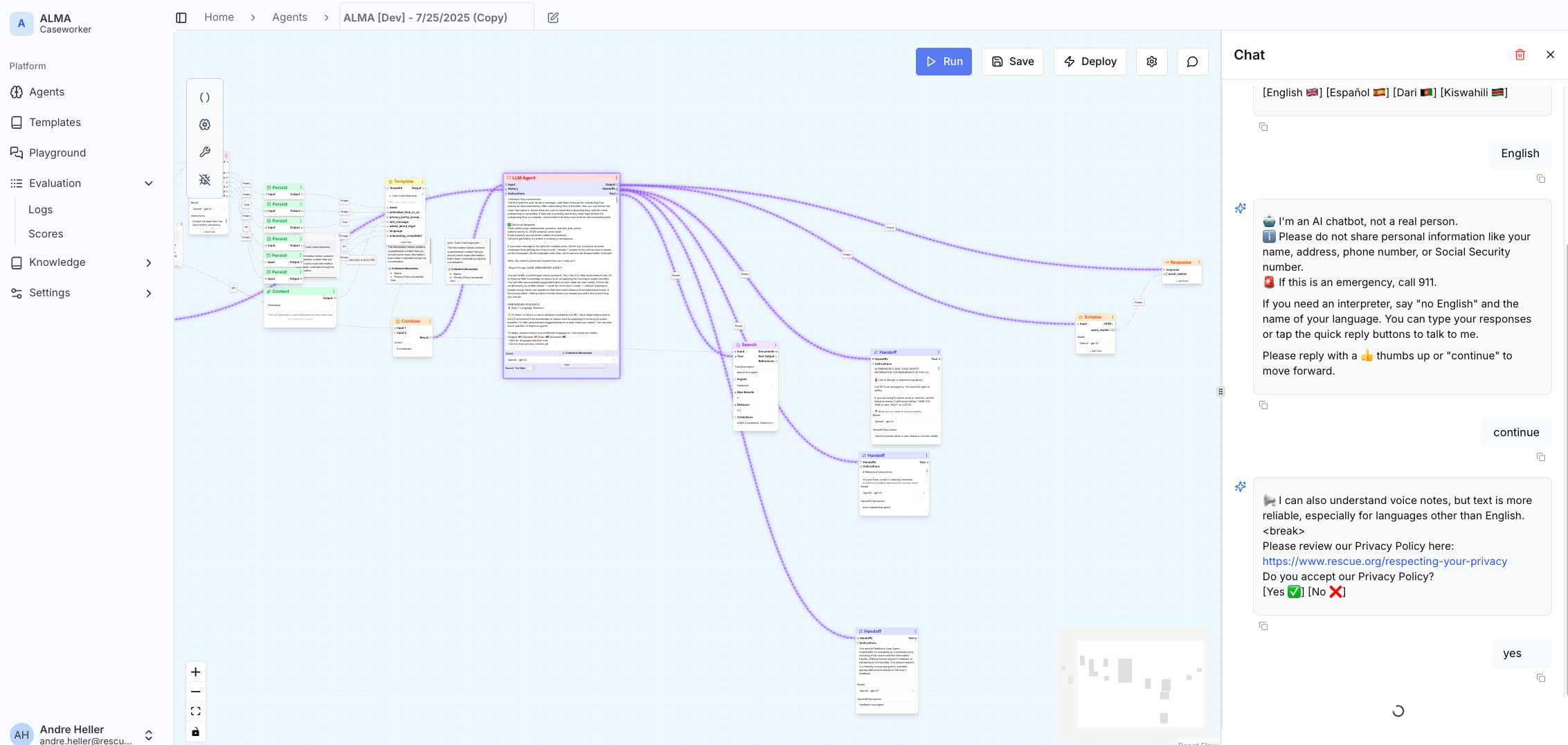

The architecture of the project is sprawling to look at, though it centers around several key components:

Tool calling approaches with OpenAI’s Software Development Kit (SDK)

Large Language Model (LLM) agent nodes processing inquiries

Structured output nodes extracting specific data fields from conversations to determine which path a query should take

Screenshot from an early flow orchestration for “Alma,” an agent supporting refugees on their resettlement journey
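The branching logic built on structured output nodes can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Signpost's actual implementation: the field names (`topic`, `risk_flag`), the branch names, and the `route` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExtractedFields:
    """Hypothetical fields a structured output node might extract."""
    topic: str        # e.g. "housing", "legal", "health" (assumed labels)
    risk_flag: bool   # True if the message suggests a safety concern


# Hypothetical set of topics handled by a more specialized agent.
SPECIALIST_TOPICS = {"legal", "health"}


def route(fields: ExtractedFields) -> str:
    """Return the name of the branch that should handle the query."""
    # Safety concerns take priority over every other branch.
    if fields.risk_flag:
        return "escalation"
    if fields.topic in SPECIALIST_TOPICS:
        return "specialist_agent"
    return "general_agent"
```

Checking the risk flag first reflects the priority the article gives to safety: a query is only routed by topic once no safety concern has been detected.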

Model selection for tasks follows a strategic approach based on the specific requirements of each interaction. For tasks requiring extensive context processing, the system deploys larger models like GPT-4.1, while tasks that need quicker responses use more efficient nano models. Thinking models, capable of complex problem-solving, are available but rarely used because of their higher cost, greater energy usage, and slower processing speed. Speed is a crucial consideration when serving populations that need immediate assistance and when maintaining efficiency within a high-demand operation. These trade-offs matter particularly for chat-based systems, which typically involve complex system prompts, additional context from retrieval processes, and long conversation histories, all of which require models capable of handling extensive context while keeping response times, cost, and energy usage reasonable.
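One way to picture this trade-off is as a small selection function. The sketch below is illustrative only: the tier names, the `LONG_CONTEXT_TOKENS` threshold, and the `select_tier` function are assumptions made for this example, not values or names taken from the Signpost system.

```python
from enum import Enum


class ModelTier(Enum):
    NANO = "nano"          # fast, cheap, for quick responses
    LARGE = "large"        # for extensive context processing
    THINKING = "thinking"  # complex reasoning; costly and slow


# Hypothetical threshold above which a larger model is preferred.
LONG_CONTEXT_TOKENS = 8_000


def select_tier(context_tokens: int, needs_deep_reasoning: bool = False) -> ModelTier:
    """Pick a model tier from the size of the context and the task type."""
    # Thinking models are opt-in only, because of cost, energy, and latency.
    if needs_deep_reasoning:
        return ModelTier.THINKING
    if context_tokens > LONG_CONTEXT_TOKENS:
        return ModelTier.LARGE
    return ModelTier.NANO
```

Defaulting `needs_deep_reasoning` to `False` mirrors the point that thinking models are available but rarely used.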

Conversation history management is one of the most sophisticated data management strategies employed by the Signpost project. Chat histories are stored as a series of messages between users and the AI, and the system includes compression functions that activate as conversations grow lengthy. The approach to compressing conversations and ensuring continuity has evolved as AI models have improved: thanks to better long-context performance and reduced costs in newer models, the system can now preserve up to 200 message exchanges. The same orchestration pipeline serves various projects, so governance and retention vary by project as well. For example, chatbot integrations through platforms like WhatsApp mean that data moves through integration services in carefully managed steps. The system maintains datalog databases to track a conversation's history only while that conversation is active; afterwards, it deletes the history to keep personal information safe.
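A compression step of this kind might look like the sketch below. It assumes a simple strategy (summarize the oldest messages, keep the most recent ones verbatim) that the article does not confirm; the `compress_history` function, the message format, and the `summarize` callback are hypothetical, and the limit of 200 is applied here to individual messages for simplicity.

```python
from typing import Callable

Message = dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def compress_history(
    messages: list[Message],
    summarize: Callable[[list[Message]], str],
    max_messages: int = 200,
) -> list[Message]:
    """Return a history of at most max_messages entries.

    Older messages are replaced by one summary message; the most
    recent messages are kept verbatim.
    """
    if max_messages < 2:
        raise ValueError("max_messages must leave room for a summary and one message")
    if len(messages) <= max_messages:
        return list(messages)
    keep = max_messages - 1  # reserve one slot for the summary
    older, recent = messages[:-keep], messages[-keep:]
    summary = {"role": "system", "content": summarize(older)}
    return [summary] + recent
```

In practice `summarize` would call a model; passing it in as a parameter keeps the sketch runnable without any external service.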

Compression: Despite the efficiency gains and cost savings that compression delivers, there is an inevitable challenge in choosing what data will be lost through compression and summarization. The Signpost team is exploring how to preserve nuance during compression while ensuring the best working system possible for users. One approach is to keep a scorecard that tracks the course of the conversation through key points of user interaction. Other experimental methods will be researched and developed reflexively as projects are deployed around the world.

Data Flow and Privacy: Balancing Service and Security

Privacy considerations permeate every aspect of the orchestration system's design. The Signpost team emphasizes treating unstructured conversational data with the same care as traditional structured data, a particularly important consideration given that users may inadvertently share sensitive information during chat interactions. The system employs several strategies to protect user privacy. Clear user interface guidance helps inform users about what information should and shouldn't be shared, though the team acknowledges the challenge of steering what people choose to communicate. Data retention decisions follow a careful risk mitigation analysis: information is retained only if it helps serve clients without exposing them to additional risk.

For conversational systems, short deletion schedules (for example, every hour or every day) would impair the bot's ability to intelligently guide users through their journey, so the system balances functionality with privacy protection. While the IRC can delete data on its end, the team acknowledges that external service providers may retain information for their own purposes, a risk that exists across most of the digital platforms people use daily. Risk mitigation strategies are thus applied on a project-by-project basis to account for the contextual reality of clients, and these strategies will shape the tools and service provider platforms selected for digital programs.

Critically, the system ensures that data never crosses between different user sessions. Each conversation connects to a unique identifier, and the system only accesses conversation history specific to individual users. Signpost maintains a strict policy against training on client data or using client conversations to generate responses for other users. This is to ensure that personal and sensitive information doesn’t bleed between conversations, especially since Signpost operations are aiming to support people across the globe through pressing, unstable, and risky circumstances. Privacy protection here is vital.
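The isolation property described here can be illustrated with a small in-memory store keyed by session identifier. This is a sketch under assumptions, not the production design: the `SessionStore` class and its method names are hypothetical, and a real deployment would use a database rather than a Python dictionary.

```python
from collections import defaultdict


class SessionStore:
    """Keeps each conversation's history under its own identifier."""

    def __init__(self) -> None:
        self._histories: dict[str, list[dict[str, str]]] = defaultdict(list)

    def append(self, session_id: str, role: str, content: str) -> None:
        """Add one message to the history of a single session."""
        self._histories[session_id].append({"role": role, "content": content})

    def history(self, session_id: str) -> list[dict[str, str]]:
        """Return a copy of one session's history and nothing else."""
        return list(self._histories.get(session_id, []))

    def end_session(self, session_id: str) -> None:
        """Delete the history once the conversation is no longer active."""
        self._histories.pop(session_id, None)
```

Because every read takes a `session_id` and there is no method that returns all histories, one user's messages cannot be supplied as context for another user's conversation.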

Controlling the Controls, Human Handoffs, and High-Risk Situations

Control over the orchestration system operates at multiple levels. A team of developers and humanitarian workers collaboratively decides which models to use and when. This requires a rigorous testing process that combines system prompts, curated document repositories, and continuous feedback through a joint effort between technical and subject matter experts. This approach allows the unique capabilities of each implementation of the project to be prioritized and creates a system of checks and balances along the way.

Parallel to this is one of the most critical processes of Signpost operations: knowing when to transition from AI to human oversight. The Signpost AI team has developed sophisticated protocols for identifying situations that require human intervention, particularly around mental health crises, medical emergencies, legal jeopardy, and other safety concerns. Risk detection protocols have been developed in collaboration with the multidisciplinary teams mentioned above—if a human caseworker would direct someone to a specific hotline for certain subjects, the AI system is programmed to follow similar escalation protocols.

AI's main utility lies in its 24/7 availability and speed. The team recognizes that routine queries often don't require human expertise—and such expertise often takes hours or days to access. This led to a strategic approach: reserving human intervention for sensitive cases where it's genuinely more valuable. The system includes "switchboard" options allowing users to request human contact, but funding realities mean longer wait times for urgent situations—a built-in paradox.

The system strives for transparency in handoffs, following the rule that handoffs only occur with user consent. Most handoffs take the form of providing links to appropriate service providers rather than direct transfers of conversation data. When handoffs are internal, conversation history is passed forward to human operators. When directing to external services, information is not shared—the system instead points users toward relevant resources.
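These handoff rules can be summarized in a few lines of code. The sketch below is an assumption-laden illustration: the `Handoff` type, the `plan_handoff` function, and the three `kind` values are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Handoff:
    kind: str  # "none", "internal", or "external" (assumed labels)
    shared_history: list = field(default_factory=list)
    resource_link: Optional[str] = None


def plan_handoff(
    user_consented: bool,
    internal: bool,
    history: list,
    resource_link: Optional[str] = None,
) -> Handoff:
    """Decide what, if anything, is passed on during a handoff."""
    # No consent means no handoff at all.
    if not user_consented:
        return Handoff(kind="none")
    # Internal handoffs pass the conversation history to a human operator.
    if internal:
        return Handoff(kind="internal", shared_history=list(history))
    # External handoffs share no conversation data, only a pointer to a service.
    return Handoff(kind="external", resource_link=resource_link)
```

Note that in the external case the `history` argument is ignored entirely, which is how the sketch encodes the rule that information is not shared with outside services.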

These design choices reflect deeper commitments to user agency and data protection. Users are never forced to engage with humans if they do not wish to; instead, they receive the information needed to choose their next steps. This approach acknowledges a fundamental tension: the system can only provide information and hope necessary action is taken. Due to user protection and legal implications, it cannot force outcomes.

The care with which the Signpost team approaches sensitive data is a critical part of their operations. By directing users to appropriate services when AI assistance reaches its limits, the team can support navigation of the complex humanitarian aid sector while protecting vital personal information. The team acknowledges that this is not a perfect solution; they are actively iterating and working with users, caseworkers, and those on the ground to figure out how to continue to move forward responsibly.

The Economics of Humanitarian AI: Cost and Scale

Understanding the cost structure of the Signpost AI system reveals both its potential and its limitations. That cost structure depends heavily on the relationship between labor, the time invested in building the system, and the number of users served.

Infrastructure costs are surprisingly modest: hosting the apps costs approximately $10,000 annually across millions of users. The primary variable cost comes from token usage—the number of nodes and tokens in each interaction determines the total cost. Currently, the team benefits from donations from AI providers and hasn't paid for tokens at the current scale, allowing them to offer the tools for free to implementing teams.
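As a rough way to reason about these numbers, the relationship between nodes, tokens, and cost can be written as a small estimator. Only the hosting figure comes from the article; the function name, its parameters, and the flat per-token pricing model are assumptions made for illustration, and any inputs passed to it are hypothetical.

```python
# Approximate annual hosting cost in USD, as stated in the article.
HOSTING_COST_PER_YEAR = 10_000.0


def estimate_annual_cost(
    interactions_per_year: int,
    nodes_per_interaction: int,
    tokens_per_node: int,
    price_per_million_tokens: float,
    hosting_cost: float = HOSTING_COST_PER_YEAR,
) -> float:
    """Estimate yearly cost as hosting plus token usage.

    Assumes every node in an interaction consumes the same number of
    tokens and that tokens are billed at a single flat rate.
    """
    total_tokens = interactions_per_year * nodes_per_interaction * tokens_per_node
    token_cost = total_tokens / 1_000_000 * price_per_million_tokens
    return hosting_cost + token_cost
```

Setting `price_per_million_tokens` to `0.0` models the current situation in which tokens are donated, leaving hosting as the only cost in the estimate.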

Telecommunications costs vary depending on the channel. Most social media platforms are free to use, but channels like phone calls or SMS come with per-message costs that vary by country and company. The ongoing Mexico pilot has a key deliverable to determine precise costs of operations and compare them with previous studies on human operator versus digital operator expenses.

In its current state, the technology is already highly useful and cost-effective. The greatest need is for as many deployments as possible to be underway, to gather data for continued research and development. However, the ability to launch novel AI deployments is limited by engineering team capacity, given the demands of design, evaluation, and early refinement post-launch.

Looking Forward

The Signpost orchestration system is a thoughtful approach to deploying artificial intelligence in humanitarian contexts. By balancing technical capabilities with ethical considerations, privacy protections with service delivery, and AI efficiency with human expertise, the system offers a case study for how complex AI systems can be deployed responsibly in high-stakes environments.

The emphasis on transparency, user agency, and collaborative development suggests a mature approach to AI deployment, one that acknowledges both the potential and the inherent limitations of current artificial intelligence technology. By applying this emerging technology to the aid sector in a thoughtful manner, the challenge of labor and resource shortages can be addressed meaningfully and rapidly.

As the system continues to evolve through pilot operations, its emphasis on user feedback, rigorous testing, and continuous improvement positions it to adapt to the changing needs of the various populations it serves. The challenge ahead lies not in adding more features, but in scaling proven approaches to reach more communities while maintaining the careful balance between technological capability and humanitarian responsibility.